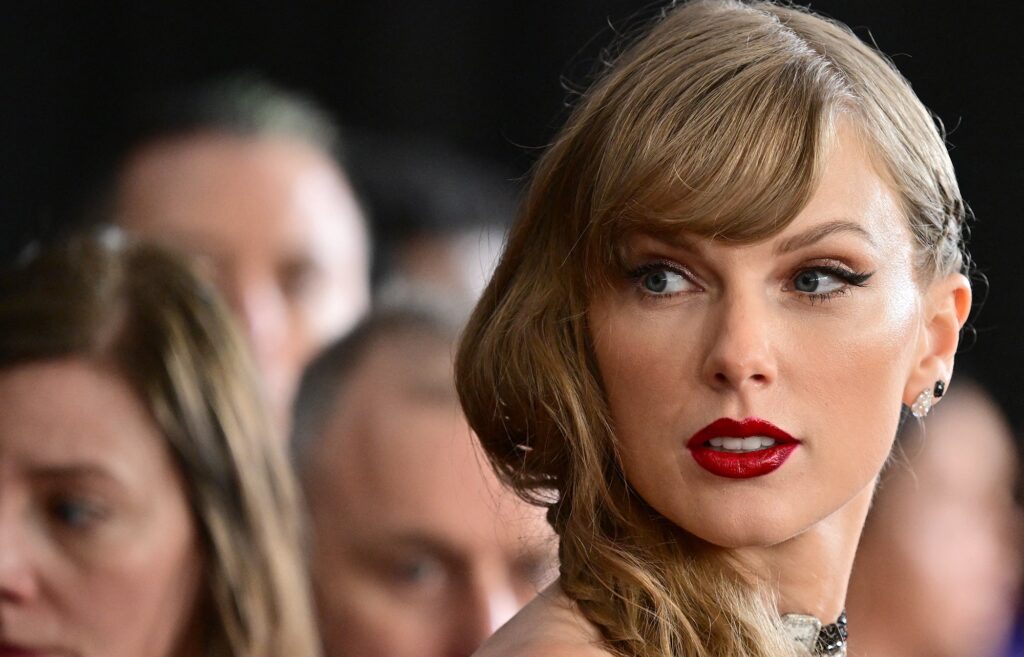

For Taylor Swift, the last few months of 2023 were triumphant. Her Eras Tour was named the highest-grossing concert tour of all time. She debuted an accompanying concert film that breathed new life into the genre. And to cap it off, Time magazine named her Person of the Year.

But in late January the megastar made headlines for a far less empowering reason: she had become the latest high-profile target of sexually explicit, nonconsensual deepfake images made using artificial intelligence. Swift’s fans were quick to report the violative content as it circulated on social media platforms, including X (formerly Twitter), which temporarily blocked searches of Swift’s name. It was hardly the first such case—women and girls across the globe have already faced similar abuse. Swift’s cachet helped propel the issue into the public eye, however, and the incident amplified calls for lawmakers to step in.

“We are too little, too late at this point, but we can still try to mitigate the disaster that’s emerging,” says Mary Anne Franks, a professor at George Washington University Law School and president of the Cyber Civil Rights Initiative. Women are “canaries in the coal mine” when it comes to the abuse of artificial intelligence, she adds. “It’s not just going to be the 14-year-old girl or Taylor Swift. It’s going to be politicians. It’s going to be world leaders. It’s going to be elections.”

Swift, who recently became a billionaire, might be able to make some progress through individual litigation, Franks says. (Swift’s record label did not respond to a request for comment as to whether the artist will be pursuing lawsuits or supporting efforts to crack down on deepfakes.) Yet what are really needed, the law professor adds, are regulations that specifically ban this sort of content. “If there had been legislation passed years ago, when advocates were saying this is what’s bound to happen with this kind of technology, we might not be in this position,” Franks says. One such bill that could help victims in the same position as Swift, she notes, is the Preventing Deepfakes of Intimate Images Act, which Representative Joe Morelle of New York State introduced last May. If it were to pass into law, the legislation would ban the sharing of nonconsensual deepfake pornography. Another recent proposal in the Senate would let deepfake victims sue such content’s creators and distributors for damages.

Advocates have been calling for policy solutions to nonconsensual deepfakes for years. A patchwork of state laws exists, yet experts say federal oversight is lacking. “There is a paucity of applicable federal law” around adult deepfake pornography, says Amir Ghavi, lead counsel on AI at the law firm Fried Frank. “There are some laws around the edges, but generally speaking, there is no direct deepfake federal statute.”

Yet a federal crackdown might not solve the issue, the attorney explains, because a law that criminalizes sexual deepfakes does not address one big problem: whom to charge with a crime. “It’s highly unlikely, practically speaking, that those people will identify themselves,” Ghavi says, noting that forensic studies can’t always prove what software created a given piece of content. And even if law enforcement could identify the images’ provenance, they might run up against something called Section 230—a small but massively influential piece of legislation that says websites aren’t responsible for what their users post. (It’s not yet clear, however, whether Section 230 applies to generative AI.) And human rights groups such as the American Civil Liberties Union have warned that overly broad regulations could also raise First Amendment concerns for the journalists who report on deepfakes or political satirists who wield them.

The neatest solution would be to adopt policies that would promote “social responsibility” on the part of companies that own generative AI products, says Michael Karanicolas, executive director of the University of California, Los Angeles, Institute for Technology, Law and Policy. But, he adds, “it’s relatively uncommon for companies to respond to anything other than coercive regulatory behavior.” Some platforms have taken steps to stanch the spread of AI-generated misinformation about electoral campaigns, so it’s not unprecedented for them to step in, Karanicolas says—but even technical safeguards are subject to end runs by sophisticated users.

Digital watermarks, which flag AI-generated content as synthetic, are one possible solution supported by the Biden administration and some members of Congress. And in the coming months, Facebook, Instagram and Threads will begin to label AI-made images posted to those platforms, Meta recently announced. Even if a standardized watermarking regime couldn’t stop individuals from creating deepfakes, it would still help social media platforms take them down or slow their spread. Moderating web content at this kind of scale is possible, says one former policy maker who regularly advises the White House and Congress on AI regulation, pointing at social media companies’ success in limiting the spread of copyrighted media. “Both the legal precedent and the technical precedent exist to slow the spread of this stuff,” says the adviser, who requested anonymity, given the ongoing deliberations around deepfakes. Swift, a public figure with a platform comparable to that of some presidents, might be able to get everyday people to start caring about the issue, the former policy maker adds.

For now, though, the legal terrain has few clear landmarks, leaving some victims feeling left out in the cold. Caryn Marjorie, a social media influencer and self-described “Swiftie,” who launched her own AI chatbot last year, says she faced an experience similar to Swift’s. About a month ago Marjorie’s fans tipped her off to sexually explicit, AI-generated deepfakes of her that were circulating online.

The deepfakes made Marjorie feel sick; she had trouble sleeping. But though she repeatedly reported the account that was posting the images, it remained online. “I didn’t get the same treatment as Taylor Swift,” Marjorie says. “It makes me wonder: Do women have to be as famous as Taylor Swift to get these explicit AI images to be taken down?”