Through analyzing 425 interactions with generative-AI bots like ChatGPT, Bing Chat, and Bard, we’ve discovered that conversations could involve many vague, underspecified prompts or few, razor-sharp ones. Why does this matter? First, different conversation types serve distinct information needs and demand varied UI designs. Second, there is no one optimal conversation length — both short and long conversations can be helpful, as they might support different user goals.

Our Research

In May and June 2023, we conducted a 2-week diary study involving 18 participants who used ChatGPT 4.0, Bard, and Bing Chat. Participants logged a total of 425 conversations and rated each for helpfulness and trustworthiness. At the end of the study, we conducted in-depth interviews with 14 participants.

The findings of this study are reported in several articles:

Types of Conversations

Several types of conversations emerged from our analysis:

- Search queries

- Funneling conversations

- Exploring conversations

- Chiseling conversations

- Expanding conversations

- Pinpointing conversations

Some conversations involved several prompts and reformulations, whereas others were relatively short.

In what follows, we discuss each of these 6 types of conversations; for each, we provide tips for both users and interface designers of generative AI chatbots.

Search Query

These conversations are one-prompt, simple queries that are not followed by any refinements. The prompt usually includes the question with no framing or format specification.

In our diary study, the intent behind search-query conversations was the same as for a web search: users were trying to locate a specific piece of information. These conversations were common when people were still trying to understand what the bots could do for them and what they were best at. In such situations, it is likely that users were simply transferring their search mental models to the AI bots.

Examples of search-query conversations from our diary study include:

- Rosie Odonnell

- Concert dates for Toby Keith

- What is a Chaffle?

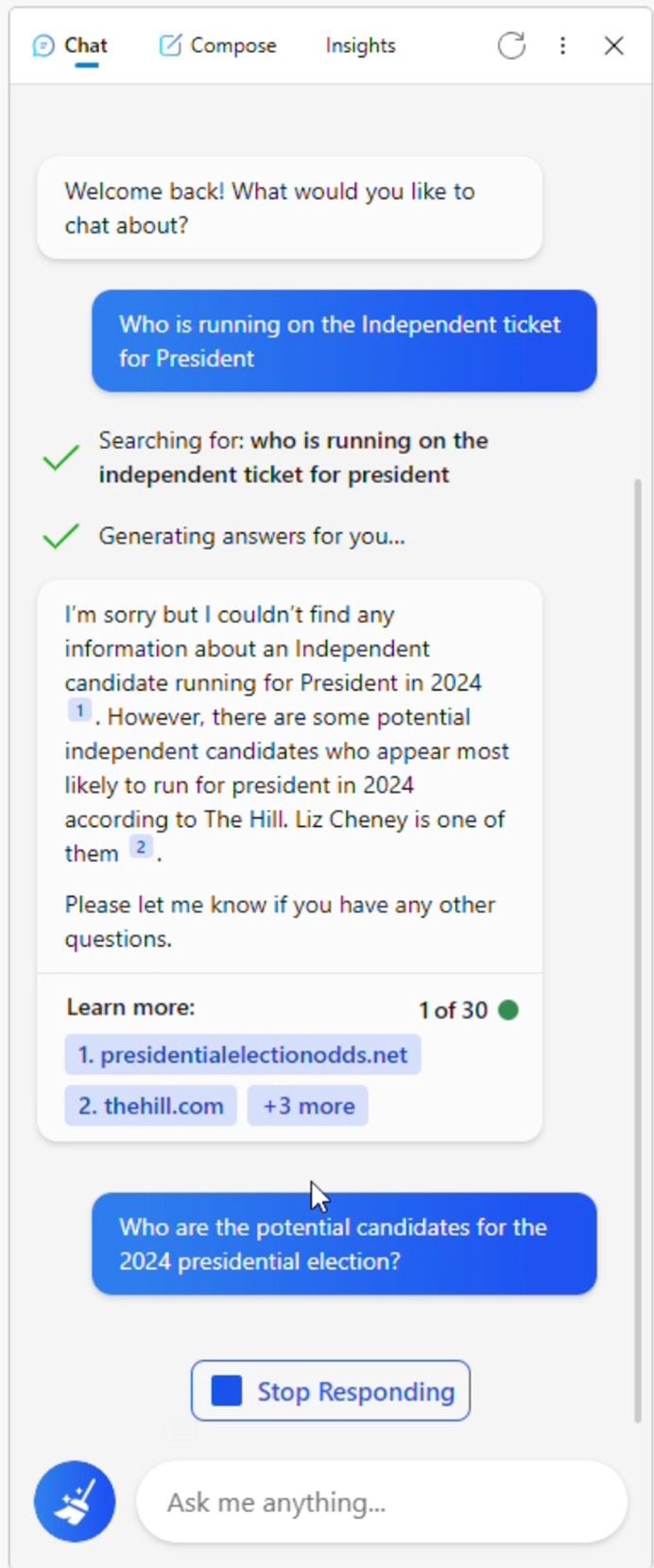

When these search-query conversations involved Bing, participants often clicked on the suggested links and visited the sites, as they normally would have done when using a search engine.

While short keyword phrases like Rosie Odonnell might work well for search, they lack enough context to help AI chatbots understand what the user is asking for. Sometimes users realized that a search engine would be better suited for such queries and quit the bot.

Both users and designers need to consider what the best tool is for a given information need. Sometimes an AI chatbot won’t do as good a job as a search engine.

Tips for Users of AI Bots

Avoid using this type of prompt when working with AI bots like ChatGPT. While search engines perform best with keywords, AI bots need more context to understand what kind of response or output you’re looking for.

Search engines might (for now) give you results that are as good as, if not better than, those from AI bots if:

- You are looking for an answer to a very specific, factual question about an event, a date, a location, or a person.

- You need to inspect a lot of options before selecting a particular one.

Tips for Designers of AI Bots

Consider allowing users to easily switch to a search-engine mode or access search-engine results, like Bard does.

Funneling Conversations

In funneling conversations, the user starts with an underspecified, sometimes too vague query and then narrows it down with subsequent questions that specify additional constraints. Often, the user doesn’t realize that they need to be more specific until they receive an unsatisfactory response from the bot.

For example, here is a sequence of queries asked by one user who was trying to find a recipe:

- i am looking for a appetizer to serve pool side on memorial day weekend. i do not want to use pork and i want something fairly easy to make that can sit outside

- how about an easier appetizer that can be passed around 30 people or so

- anything other than skewers? i was thinking some sort of dip?

If the user had anticipated all of these requirements, she could’ve achieved her desired result with a single prompt:

I want an easy appetizer recipe for 30 people to serve poolside on Memorial Day Weekend. I’m thinking of some sort of dip. I don’t want to use pork and the appetizer needs to be able to sit outside in the heat.

However, she likely did not consider this full set of constraints until she received the bot’s initial suggestions.

Funneling conversations are often long, as the user might need to input several prompts to refine their query. Sometimes they start with best-of prompts or other basic prompts that lack details. For example, here’s a sequence of prompts from a user interested in figuring out how to prevent her migraines:

- What are the best cures for a migraine?

- What do you think would be the most effective cure for a migraine?

- I experience migraines 2-3 times a week, is there a likely cause for this?

- I normally have 2-3 cups of coffee per day and on days when I don’t consume any, I usually get migraines. Can a lack of caffeine contribute to migraines?

- How many milligrams of caffeine are typically in a 12 oz cup of coffee?

Note that, in funneling conversations, the user’s information need is usually specific and well-defined, but poorly articulated. In other words, the user will likely recognize a correct response, but will not be able (or sometimes will not be bothered) to say what that correct response should look like. However, the bot can facilitate query articulation by asking the user helping questions.

In some of the conversations we studied, the bot did ask for clarification. For example, one participant started the conversation with Issue with swimming pool cleaner. ChatGPT asked for specific information about the cleaner and the issue, expediting the funneling process.

Tips for Using AI Bots

Consider explicitly telling the AI bot to ask helping questions to improve its output. For example, you may add phrasing such as Ask me questions if you need additional information, to get the bot to help you articulate the different constraints that you may be working with.
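For readers who send prompts programmatically, the tip above can be sketched as a small helper. This is only an illustration: the function name `with_clarifying_request` and the constant `CLARIFY_SUFFIX` are hypothetical, and the suffix text is the phrasing suggested in this article, not a requirement of any particular chatbot.

```python
# Hypothetical helper: appends the article's suggested phrasing to a prompt.
CLARIFY_SUFFIX = "Ask me questions if you need additional information."


def with_clarifying_request(prompt: str, suffix: str = CLARIFY_SUFFIX) -> str:
    """Return the prompt with a request for clarifying questions appended."""
    text = prompt.strip()
    if not text:
        return suffix
    # Avoid appending the request twice.
    if text.lower().endswith(suffix.lower()):
        return text
    # Close the user's sentence before adding the request.
    if text[-1] not in ".!?":
        text += "."
    return f"{text} {suffix}"


print(with_clarifying_request("I want an easy appetizer recipe for 30 people"))
```

The helper leaves the user's wording untouched, so the bot still sees the original constraints and is simply invited to ask about the missing ones.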

Tips for Designing AI Bots

To reduce the articulation load in funneling conversations, the bot should ask helping questions that narrow down underspecified queries.

Exploring Conversations

In exploring conversations, the user starts with a general, less-defined query (usually lacking context or output-format specifications) and then uses the AI to understand the information space and get ideas for new queries.

Exploring conversations differ from funneling conversations in that, in the beginning, the user is not able to formulate a specific enough query because they have a less-defined information need and lack the necessary vocabulary or knowledge (rather than because they did not spend the time to think of all the requirements for their information need). The bot’s responses help the user learn about the structure of the information space and give them new terminology and ideas about what to ask next.

An exploring conversation often feels like a conversation with a real teacher; the user acts as a student learning by acquiring depth into a topic and asking questions as they learn. The user is building queries based on the information received from the bot.

For example, one user asked What is the meaning of life? The bot talked about various kinds of views, including philosophical, religious, and absurdist views. The user then asked to know more about one of these — absurdism. When the bot mentioned the book “The Myth of Sisyphus” by Albert Camus, the user asked for other works by the same author.

In exploring conversations, users can be supported with suggested followup prompts that naturally build upon the information presented in the bot’s answer.

Tips for Designing AI Bots

If the user’s information need is broad and the bot’s response is complex, containing jargon and domain-specific facts or concepts, offer a list of suggested followup prompts that build upon these details.

The followup prompts can include:

- Definitions for domain-specific words (e.g., What is …?)

- Additional information about any of the facts or concepts included in the response (e.g., Why…?, What caused …?, How can one do …?)

However, detail-oriented suggested followup prompts do not need to be included in all conversations. Conversations with well-defined information needs (such as pinpointing conversations — see below) rarely benefit from such followup prompts. For example, a user rewriting her resume said:

if I’m asking you to put something in my resume, like, I do research in […] B-cell malignancies, I don’t necessarily need to ask ‘what are B-cell malignancies.’

Chiseling Conversations

In chiseling conversations, the user asks about different facets of the same topic, fleshing it out from a variety of angles, much as a sculptor chisels a sculpture from a piece of stone. Chiseling conversations cast a wide net over a topic to acquire breadth. They can feel like research on a specific topic, but the research is person-driven rather than driven by the bot’s answers.

Here is a sequence of questions asked in the same chiseling conversation about ADHD:

- What are some tips for people with ADHD to remember daily tasks more effectively?

- who are some famously successful people who have ADHD

- is ADHD considered a disability?

While these questions are all related to ADHD, they cover various aspects of ADHD and do not explore any one of these in depth. It feels as if the respondent is trying to learn many facts about the topic, without focusing on the logical relationship between these facts.

Another user was doing research on the company Insperity. She asked multiple questions related to different aspects of the company:

- What does insperity do?

- How does Insperity make money?

- What percentage of revenue comes from each area?

- How is Insperity’s revenue broken down? (reformulation of previous question)

- What are some goals of Insperity?

- What are executive priorities at Insperity?

- How is Insperity’s stock doing?

- Do you have access to analyst reports? for example analysts from Morgan Stanley?

Both exploring and chiseling conversations correspond to less-defined information needs and imply learning about a topic. In exploring conversations, however, the learning involves acquiring depth in the topic, whereas in chiseling conversations the learning is carried out through breadth.

Tips for Designing AI Bots

Like exploring conversations, chiseling conversations benefit from suggested followup queries. However, for chiseling conversations, suggested followup prompts should be broad, inquiring about multiple facets of the same topic or about related topics (e.g., How about…?).

In contrast, for exploring conversations, these prompts should go in depth, delving into details about a particular concept or fact in the answer.
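The difference between depth-focused and breadth-focused followups can be sketched in code. This is a minimal illustration under stated assumptions: the function names are hypothetical, the question stems (What is …?, How about …?) are taken from the examples in this article, and the lists of concepts and related topics are assumed to come from some other step that is not shown here.

```python
from typing import List


def depth_followups(concepts: List[str], limit: int = 2) -> List[str]:
    """Exploring: dig into concepts mentioned in the bot's answer."""
    return [f"What is {concept}?" for concept in concepts[:limit]]


def breadth_followups(related_topics: List[str], limit: int = 2) -> List[str]:
    """Chiseling: move sideways to other facets or related topics."""
    return [f"How about {topic}?" for topic in related_topics[:limit]]


def suggest_followups(kind: str, concepts: List[str],
                      related_topics: List[str], limit: int = 2) -> List[str]:
    """Pick followups by conversation type; offer both when the type is unknown."""
    if kind == "exploring":
        return depth_followups(concepts, limit)
    if kind == "chiseling":
        return breadth_followups(related_topics, limit)
    return depth_followups(concepts, limit) + breadth_followups(related_topics, limit)


print(suggest_followups("exploring", ["absurdism"], ["existentialism"]))
```

Returning both kinds for an unknown conversation type reflects the difficulty of inferring intent from a single prompt.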

Pinpointing Conversations

In pinpointing conversations, the user has a defined topic in mind and creates a very specific prompt from the very beginning. The prompt often includes context and a format specification.

Summer Cocktail Party: My wife and I are hosting a small cocktail and dinner party welcoming my daughter’s future in-laws for a visit to California in late July. The party will be indoors and outdoors by the pool, and we will be grilling something no doubt for the meal. It will be hot in late July so drinks that are refreshing would be order. I am not a professional bartender, but I have been studying “mixology” for the past year and do have all of the bar tools. I know how to make all of the classic cocktails. I would like a summer-themed cocktail menu of four to five drinks with clever names. I will put them on a framed menu on the counter in my outdoor kitchen and bar areas where I will make the drinks.

Sometimes the query might also include pasted text from another source or some other supporting documentation, like in the prompt below, which quoted a previous email chain (names were changed for privacy):

I would like to reach out to Bob to ask if he can do some part-time work for the […] school site visits for my department starting in August through the end of September. Here is some background from an email exchange last year:

You bet! We’ll keep you posted, Bob!

John Smith, Ed.D. Department Administrator College & Career | Leadership Support Services

From: Bob Jones

Date: Monday, September 12, 2022 at 9:50 AM

To: John Smith

Subject: Re: Thank you!

Thank you John! I enjoyed the time I was able to spend with school leaders and with Andrew. With the new requirements and the possibility of more schools added to the three year cohort I would be available next year if you think I could help. Thank you for your confidence!

Bob Jones

Sent from my iPhone

On Sep 12, 2022, at 9:38 AM, John Smith wrote:

Good Morning, Bob, I wanted to thank you for your support of the [school] visits this year. Your expertise and experience as a former district leader really benefited the process. Even with some new requirements, it was one of our smoothest years ever with your help! I hope you enjoyed this work, too, and will consider leading visits again next year if you’re available. Thanks again for lending us your time in this area!

John

Pinpointing conversations require great effort from the user, who needs to provide all the information necessary for a comprehensive response. They often contain well-specified, “engineered” prompts. However, unlike with other conversation types, the user rarely needs to spend time on query refinements and the result is a shorter, straight-to-the-point exchange.

Funneling conversations can sometimes be converted into pinpointing conversations if the bot asks the user detailed questions about their query, inviting them to specify all those details in their next prompt.

Tips for Designing AI Bots

Ask the user for specific details about their question, as well as about the format of the answer.

Consider giving users examples of the information they could provide in a prompt if the prompt is too vague or underspecified.

Expanding Conversations

The expanding conversation usually starts with a narrow topic that gets expanded, often because the results from the original prompt were unsatisfactory (e.g., not up to date) or because the user decides they need more detail.

For example, in the following sequence, the user expanded his original query twice.

| Example of Expanded Conversation | |
|---|---|
| Start prompt | |
| Expanded prompt: | |
| Expanded prompt: | |

When the expanding conversations involved Bing, the expansions were usually selected from the suggested followup queries.

Expanding conversations remind us of what happens when people get zero search results for a search query: they often try to remove one of the criteria of their query in the hope that they will get something good enough. In such situations, search facets have been shown to help people by guiding them to ask queries that have one or more results.

Similarly, in the context of expanding conversations with AI, suggested followup prompts can show the user how to expand their question to get a meaningful answer. However, such broader followup prompts should yield an answer that differs from the answer to the original question.

For instance, one study participant used Bing Chat to look for free events happening in Nashville this weekend. Unsatisfied with the response she received, she expanded her query using a suggested followup What are some popular free events in Nashville. Disappointingly, she found the results were very similar. She commented:

I used one of the suggested follow up questions as the chatbot was not providing very specific information regarding Nashville events. It yielded pretty much identical results to the question I had posed. While it was nice to have the prompts below, I feel that they should maybe pose newer information.

Tips for Designers of AI Bots

When the bot is not able to provide an answer to the user’s query, provide suggested followup prompts that relax some of the criteria in the user’s original question and return an answer.
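One way to picture "relaxing criteria" is to drop one constraint at a time from the user's request, in the same way search facets are removed. The sketch below assumes the query has already been split into a topic and named criteria. That structure, the function names, and the rendering format are all hypothetical and serve only to illustrate the idea.

```python
from typing import Dict, List


def render(topic: str, criteria: Dict[str, str]) -> str:
    """Format a topic and its remaining criteria as a followup prompt."""
    if not criteria:
        return topic
    details = "; ".join(f"{name}: {value}" for name, value in criteria.items())
    return f"{topic} ({details})"


def relaxed_followups(topic: str, criteria: Dict[str, str]) -> List[str]:
    """Build one followup per criterion, each with that criterion removed."""
    followups = []
    for dropped in criteria:
        remaining = {k: v for k, v in criteria.items() if k != dropped}
        followups.append(render(topic, remaining))
    return followups


print(relaxed_followups("events in Nashville",
                        {"price": "free", "when": "this weekend"}))
```

A real bot would also need to check that each relaxed prompt actually leads to a different answer, which this sketch does not attempt.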

Combinations of Several Types

Some conversations start as one type and then morph into a different one. For example, chiseling conversations may also have exploratory elements that build upon something that the bot has said.

One user had the following exchanges with ChatGPT; they combined chiseling and exploring conversations.

| A Conversation Combining Chiseling and Exploring Prompts | |
|---|---|
| Chiseling prompts: | |
| Exploring prompt: | |
| Exploring prompt: | |
| Chiseling prompt: | |

Conversation Length Is Not a Success Indicator

On average, a conversation in our diary study included 3.6 participant-generated prompts (95% confidence interval: 3.3–3.9); the median length was 3.

In general, the higher the number of attempts to do a task, the worse the usability. (For example, if the average number of submit attempts on a form was 3.6, that would mean that the form was pretty bad.)

However, with AI conversations, it is not the case that longer conversations necessarily represent more strenuous attempts at getting information from the bots. In fact, there was no correlation between the length of the conversation and its helpfulness or trustworthiness ratings, as collected from our study.

For certain types of conversations like funneling and pinpointing, the length indicates how well-articulated the initial prompt was. Long funneling chats start with vague prompts that need more detail. Short pinpointing chats begin with detailed prompts that already lay out all the requirements for an acceptable answer. In both such conversations, the user’s information need is constrained and fairly narrow — they need a specific piece of information or a specific output.

For exploring and chiseling conversations, length is part of the conversation’s nature and serves the user’s less well-defined information need. Exploring conversations require a back-and-forth between the bot and the human, with the human learning from the bot and choosing to go deeper and build upon the bot’s response. Chiseling conversations are also long because the goal is to acquire breadth in a subject, which requires the user to ask multiple, loosely related questions about different aspects of a given topic. In both these types of conversations, the goal is learning about a topic, and the conversation serves to define the user’s information need.

A special type of short conversation is the search query. This type of conversation was usually more likely to occur when people were still exploring the range of tasks that the AI bot could do for them.

Search queries were common with Bard; that is likely why conversations with Bard were shorter than conversations with ChatGPT (p < 0.001) or Bing (p < 0.001). It could be that Bard participants were primed by the prominence of Google as a search engine and tended to use Bard as a proxy for Google search.

A final word about the length of expanding conversations: they can be long or short, depending on how successful the user is in finding a satisfactory prompt that the bot can answer. They can also evolve into other kinds of conversations once the user finds the right way of formulating their query.

Taxonomy of AI Conversations by Information Need

| Narrow, Well-Defined Information Need | Broad, Undefined Information Need |
|---|---|
| Funneling, pinpointing, expanding, search queries | Exploring conversations: acquiring depth of knowledge. Chiseling conversations: acquiring breadth of knowledge |

Tips for Using Text-Based Generative-AI Bots

There is no ideal length for all conversations. If the user has a clear, well-defined information need, then a good, detailed, pinpointing prompt will get them what they need fast.

But if the user’s goal is broad and less well-defined, then a single exchange will likely not be enough. The user can use the bot’s responses to learn — either through breadth or depth.

- Specific information needs benefit from detailed, pinpointing prompts.

- Adding phrasing such as Ask me questions if you need additional information at the end of the user’s prompts can get the bot to help articulate the different constraints that the user may be working with.

- For factual queries, users may (for now) be better off using a search engine instead of generative AI.

Recommendations for Designing the UX of Generative AI

The structure of the generative-AI conversations is teaching us about what designers can do to improve the overall experience of generative AI and allow users to efficiently address their information needs.

Bots should attempt to use the length and the structure of the user’s prompt, as well as the complexity of the answer, to determine the conversation type early in the exchange and adjust their behavior accordingly. Different conversation types address different information needs, and the support provided by the bot needs to be tailored to each need.

Specifically:

- Search queries (i.e., prompts that are short, often incomplete sentences) can indicate that users are inexperienced with AI. These conversations can be treated as funneling conversations; the user can be asked further questions so that the bot can provide a satisfactory response. Alternatively, the bot can also offer the user the option to access search results for the same question.

- When bots receive vague, underspecified prompts (as in funneling conversations), they should ask the user questions to help them narrow down the conversation topic. For example, for a prompt such as best Mother’s Day gifts, the bot can ask for specific information (e.g., age, hobbies, location) about the gift recipient. The bot can also offer example pinpointing prompts to help inexperienced users learn to use the AI.

- In broad conversations (such as exploring or chiseling conversations) that require long or complex and nuanced responses, suggested followup prompts should help the user learn. While it may be difficult to establish what kind of intent the user has from a first prompt, bots could offer both depth-focused and breadth-focused suggested followup prompts.

- In expanding conversations, which usually map onto situations when the bot was not able to provide an answer, the suggested followup prompts need to be slightly broader refinements that allow the user to find something useful, even if they will not precisely match their original need.
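As a rough illustration of using prompt length to guess the conversation type, the sketch below applies simple word-count thresholds to the first prompt. The thresholds (5 and 40 words), the labels, and the `SUPPORT` mapping are assumptions made for this example. They are not values measured in the study, and a real bot would likely rely on richer signals than length alone.

```python
import re

# Hypothetical thresholds, chosen only for illustration.
SHORT_PROMPT_MAX_WORDS = 5
DETAILED_PROMPT_MIN_WORDS = 40

# Support each guessed type might receive, paraphrasing the recommendations above.
SUPPORT = {
    "search_query": "ask clarifying questions or offer search results",
    "pinpointing": "answer directly",
    "funneling_or_broad": "ask narrowing questions; suggest depth and breadth followups",
}


def guess_conversation_type(first_prompt: str) -> str:
    """Guess the conversation type from the length of the first prompt."""
    word_count = len(re.findall(r"[A-Za-z0-9']+", first_prompt))
    if word_count <= SHORT_PROMPT_MAX_WORDS:
        return "search_query"
    if word_count >= DETAILED_PROMPT_MIN_WORDS:
        return "pinpointing"
    return "funneling_or_broad"


kind = guess_conversation_type("Rosie Odonnell")
print(kind, "->", SUPPORT[kind])
```

Because a mid-length first prompt could begin a funneling, exploring, or chiseling conversation, the sketch groups these together and defers the decision to later turns.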