Summary:

Use methods like 5-second testing, first-click testing, and preference testing to gain insights into how users perceive your visual design.

Visual design is the first thing users notice when they encounter a digital product like a website or app. It plays a large role in capturing users’ attention, defining brand identity, and creating an emotional connection.

Designers often choose visual elements they believe effectively represent specific brand traits. However, since you and your team are not the users, it’s necessary to gather feedback directly from your target audience to assess the effectiveness of your designs. Aesthetic choices, branding, and the impact on user behavior can be assessed through various methods.

Visual-Design Testing Methods

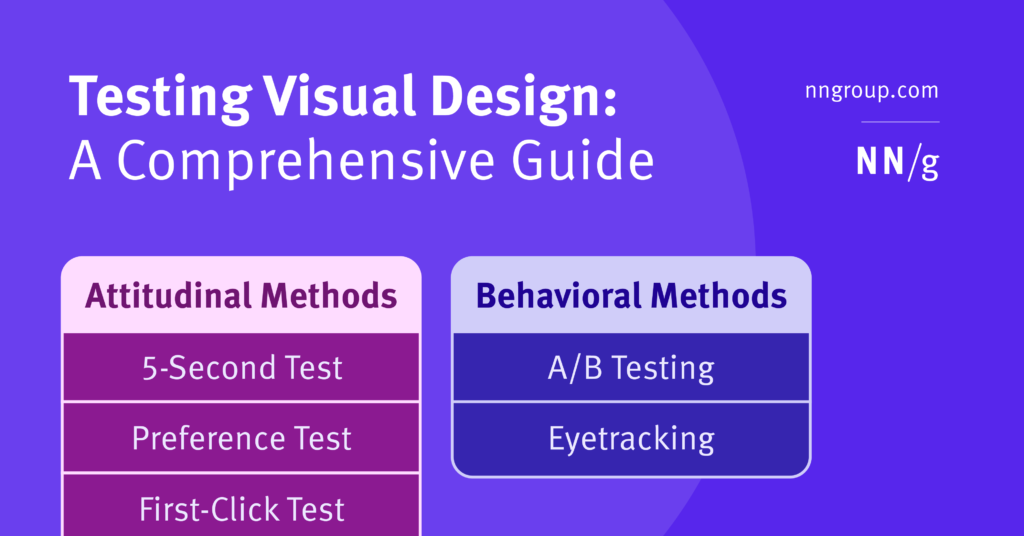

Instead of asking people whether they like a design, use a structured approach to test exactly what you want. Depending on your goals, choose between attitudinal and behavioral testing methods.

Attitudinal methods gather self-reported thoughts, feelings, and opinions from users. When applied to visual design, attitudinal methods help explore how users feel about a design’s aesthetics, tone, and style. These methods are ideal when your goal is to evaluate brand alignment or assess users’ first impressions.

Behavioral testing methods, such as A/B testing and eyetracking studies, help you observe how users interact with your product. When applied to visual design, these methods help assess how design elements influence users’ ability to complete tasks and navigate the system. This approach requires the researcher to have a strong understanding of the specific user behaviors and actions that may indicate visual-design issues. If your goal is to evaluate the impact of visual design on task success or usability, behavioral testing is more appropriate.

This article focuses primarily on attitudinal methods, as they offer a direct and robust way to assess whether your visual design aligns with users’ perceptions and your brand identity.

Attitudinal Testing Methods for Visual Design

Attitudinal testing methods for visual design help you understand users’ preferences and perceptions at the beginning of the design process so you can implement changes before the design is released. By assessing users’ attitudes towards a design, you can gauge whether your design elements, such as color schemes, typography, imagery, and layout, align with your brand identity.

Attitudinal approaches involve answering the following two questions:

- How will you expose users to the design? To decide on a testing method, you must identify the number of designs you want to test, how long you’ll show each to participants, and how much your participants will interact with the designs.

- How will you assess users’ reactions? Use either open-ended or strictly controlled questions to measure users’ reactions to the design.

1. How Will You Expose Users to the Design?

Attitudinal methods use static images and require minimal or no interaction with the product. If you’re hoping to gauge users’ initial reactions to visual elements and are not concerned with the effects that visual-design choices have on behavior and usability, you can choose among the following common attitudinal visual-design testing methods:

- 5-second test

- First-click test

- Preference testing

- Asking visual design questions after usability testing

5-Second Test

A 5-second test is a first-impression test, in which you show the stimulus for 5 seconds (or for another short period of time) to capture people’s “gut reaction” or first impression of a website or design. Five seconds of viewing time is too short for reading copy or noticing details like specific fonts, but it is enough to formulate an impression of the visual style. A strong first impression can build user trust and interest.

When conducting a 5-second test, avoid warning participants that the design will be shown for only five seconds. This helps capture participants’ authentic initial reactions without priming them to overanalyze or prepare to memorize the details of the design.

First-Click Test

First-click tests are ideal for interfaces where users are likely to have a specific goal in mind when they visit your site.

First-click tests tell you whether users can accurately find what they need on your website. To start, give participants a specific task (such as “Find out if this website sells candles”). Then, end the task as soon as the participant clicks the location on the screen where they expect to find that information.

Most users will spend only a few seconds on this type of test and will likely not review the whole page. Instead, they will search for a specific feature or link that looks like the most actionable place to achieve their goal, and the rest will be viewed peripherally.
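A common way to summarize a first-click test is the share of participants whose first click lands on an acceptable target. The sketch below assumes you have recorded each participant’s first click as pixel coordinates and defined one or more rectangular target areas; the `Rect` class, the coordinates, and the target names are hypothetical and not tied to any particular testing tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Hypothetical rectangular target area, in screen pixels."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def first_click_success_rate(clicks: list[tuple[float, float]], targets: list[Rect]) -> float:
    """Share of participants whose first click landed inside any acceptable target."""
    if not clicks:
        raise ValueError("no clicks recorded")
    hits = sum(1 for x, y in clicks if any(t.contains(x, y) for t in targets))
    return hits / len(clicks)


# Task: "Find out if this website sells candles."
# Hypothetical acceptable targets: a "Shop" navigation link and the search box.
targets = [Rect(120, 20, 200, 50), Rect(600, 20, 900, 55)]
clicks = [(150, 35), (700, 40), (400, 300), (180, 45), (50, 600)]  # one first click per participant
print(f"{first_click_success_rate(clicks, targets):.0%}")  # 60%
```

Allowing several targets reflects that more than one location on the page can be a reasonable place to start the task.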

Preference Testing

Preference testing involves showing participants two to three design variations and asking them to choose which one they prefer.

When asking a user to evaluate different versions of the same design, the differences must be significant enough to be immediately detectable to a nondesigner. Small changes, such as minor variations in font sizes or substitutions of similar fonts, may be obvious to a visual designer but are often undetectable to the average user. Asking people to identify and evaluate these subtle details will most likely just confuse participants and waste your time. (Even worse, you may fall prey to the query effect, where users make up an answer even though they don’t really feel differently about the two overly similar versions.)

Additionally, limit the number of elements changed between each version. For example, rather than changing everything, adjust only each version’s layout while keeping images, colors, and icons the same. This approach helps you draw clear conclusions and identify which elements have the greatest impact.

Remember, users’ responses may be influenced by which version of the design they see first. For example, if one version is easier to understand, those who see that version first will have learned about the content and will be less confused by subsequent variations. For this reason, you should counterbalance or randomize the order in which you present design variations to your participants.
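With only two or three variations, full counterbalancing is practical: cycle through every possible presentation order so each order, and therefore each variation in each position, appears equally often. The minimal sketch below shows that approach alongside simple per-participant randomization; the variant labels and participant count are hypothetical.

```python
import itertools
import random


def counterbalanced_orders(variants, n_participants):
    """Full counterbalancing: assign every permutation of the variants in rotation."""
    perms = list(itertools.permutations(variants))
    return [list(perms[i % len(perms)]) for i in range(n_participants)]


def randomized_orders(variants, n_participants, seed=None):
    """Alternative: shuffle the presentation order independently for each participant."""
    rng = random.Random(seed)
    orders = []
    for _ in range(n_participants):
        order = list(variants)
        rng.shuffle(order)
        orders.append(order)
    return orders


# 3 variants have 6 possible orders, so 12 participants use each order exactly twice.
orders = counterbalanced_orders(["A", "B", "C"], 12)
for i, order in enumerate(orders[:3], start=1):
    print(f"P{i}: {order}")
# P1: ['A', 'B', 'C']
# P2: ['A', 'C', 'B']
# P3: ['B', 'A', 'C']
```

Full counterbalancing works best when the number of participants is a multiple of the number of orders; randomization is simpler when you cannot control how many people complete the study, at the cost of some imbalance.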

Asking Visual Design Questions After Usability Testing

Usability testing is a behavioral method that evaluates the usability of a design. Typically, visual-design tests and usability tests are conducted separately. However, if you have time or resources for only one study, add an attitudinal component to your usability test by including questions meant to assess the effects of visual design.

It’s important to present behavioral tasks first and aesthetic assessments afterward. This is because asking someone’s opinion about the visual design at the beginning of the session risks biasing the behavioral tasks portion of the study. For example, if users have seen multiple versions and picked a “favorite,” they are likely to ignore or minimize any problems they experience with their “favorite” version throughout the rest of the session.

Unlike with purely attitudinal methods, users’ answers won’t be based exclusively on visual impressions. However, the impression formed from the combination of visuals, content, and interaction can be closer to how users react in the real world.

2. How Will You Assess Users’ Reactions?

Participants’ perceptions of design aesthetics can vary widely and will need to be analyzed to identify meaningful trends. You can ask them to give feedback on a visual design in four ways, listed from least to most structured:

- Open-ended preference explanation

- Open word choice

- Closed word choice

- Numerical rating scale

Open-Ended Preference Explanation

This method involves asking people to explain why they like or dislike a design. The following questions can be used to learn more about participants’ impressions of the visuals:

- How would you describe this page?

- Which version of the design do you prefer? Why?

- Do you find this design more [trait] or [opposite trait] (for example, exciting or boring)?

This assessment method casts the broadest net and can be useful if you do not know much about your audience’s expectations and want to discover what matters to them. It can also identify and screen out random, purely subjective preferences (such as “I like purple”). The drawback of this approach is that unmotivated participants may offer only brief or irrelevant responses. For that reason, this method is especially risky in an unmoderated remote setting since you will not be able to ask followup questions if someone gives a vague response like “It’s nice.”

Open Word Choice

A slightly more structured approach to assessing user perceptions is to ask test participants to list several words that describe the design. This format ensures that you receive specific feedback while still keeping the question open-ended, which allows you to discover new factors that are important to your audience.

You may get a wide range of descriptors back and will need to analyze them carefully to identify meaningful themes. A good approach for this analysis is to categorize terms as generally positive, negative, or neutral, then group words with similar meanings and evaluate whether they match your target brand attributes.

For example, the table below shows descriptors provided about a business-to-business website whose brand goal was to be trustworthy, contemporary, and helpful. None of these words were mentioned by the study participants as descriptors, but many users described the design as simple (with both positive and negative connotations).

| Positive | Neutral | Negative |
|---|---|---|
| simple; simple, bold; professional, neat; corporate; elegant; human | sober; tripartite; 3 parts | bland; bland, typical, safe; too simple; simple, generic; plain; basic; dated; too much information; clinical |

Open-ended word-choice questions elicit a broad range of descriptors, which must be analyzed to determine whether they effectively express the desired brand traits.
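The analysis steps described earlier (code each term’s sentiment, group similar words into themes, then compare against the target brand attributes) can be sketched as follows. The descriptors come from the table above, but the split into individual terms and the synonym groupings in `THEMES` are a hypothetical coding a researcher might choose. Sentiment is attached to each response rather than to each word, because the same word (such as “simple”) can be meant positively or negatively.

```python
from collections import Counter

# Each response as coded by the researcher: (descriptor, sentiment).
coded = [
    ("simple", "positive"), ("simple", "positive"), ("bold", "positive"),
    ("professional", "positive"), ("neat", "positive"), ("corporate", "positive"),
    ("elegant", "positive"), ("human", "positive"),
    ("sober", "neutral"), ("tripartite", "neutral"), ("3 parts", "neutral"),
    ("bland", "negative"), ("bland", "negative"), ("typical", "negative"),
    ("safe", "negative"), ("too simple", "negative"), ("simple", "negative"),
    ("generic", "negative"), ("plain", "negative"), ("basic", "negative"),
    ("dated", "negative"), ("too much information", "negative"), ("clinical", "negative"),
]

# Hypothetical synonym groups; words not listed form their own theme.
THEMES = {
    "too simple": "simple", "plain": "simple", "basic": "simple",
    "bland": "generic", "typical": "generic", "safe": "generic",
    "3 parts": "tripartite",
}

TARGET_TRAITS = {"trustworthy", "contemporary", "helpful"}


def theme_counts(responses):
    """Count responses per (theme, sentiment) pair."""
    counts = Counter()
    for word, sentiment in responses:
        counts[(THEMES.get(word, word), sentiment)] += 1
    return counts


counts = theme_counts(coded)
print(counts[("simple", "positive")], counts[("simple", "negative")])  # 2 4
print(TARGET_TRAITS & {theme for theme, _ in counts})  # set()
```

The empty intersection mirrors the finding above: none of the target brand traits appeared, while “simple” showed up on both sides.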

Closed Word Choice

Closed word choice, also known as desirability testing, requires users to choose descriptors from a list of terms. Providing users with a limited set of words allows you to focus on whether participants pick up the target brand attributes. Include the brand traits you hope to convey in your list of terms, along with distractors that describe other possible attributes, as well as contradictory, negative qualities.

Closed word choice makes analysis easier by allowing you to compare multiple design versions or reactions from different audience groups. The drawback of this approach is that it provides fewer opportunities than open word choice to discover new points of view.

In the example below, an ecommerce store identifies a set of ideal brand traits alongside their opposites (e.g., engaging vs. boring). These traits are compiled into a word bank along with other distractor choices and presented to participants, who are then asked to choose words that best describe the brand or design.

This technique works well in a moderated setting, where you can ask participants followup questions and allow them to refer to the design as they explain their reasoning for selecting each term. When conducting your sessions, randomize the order of the word list for each participant to account for those who do not read the whole list and just select the first few words they see.

Also, it is best to allow participants to see the design as they choose words from the list. Thus, combining a closed word choice with a 5-second test is not recommended because, by the time participants reach the end of a long list of words, they might not remember much about a design they saw for only 5 seconds.
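The per-participant randomization and the later tally of choices can be sketched as below. Apart from “engaging” and “boring,” which come from the ecommerce example, every word in the bank is hypothetical, as are the participant IDs and selections. Seeding the shuffle with the participant ID is one design choice that lets you reconstruct exactly which order each person saw.

```python
import random
from collections import Counter

# Hypothetical word bank: target traits, their opposites, and distractors.
TARGET_TRAITS = ["engaging", "trustworthy", "modern"]
OPPOSITES = ["boring", "untrustworthy", "dated"]
DISTRACTORS = ["playful", "luxurious", "minimal", "busy"]
WORD_BANK = TARGET_TRAITS + OPPOSITES + DISTRACTORS


def word_list_for(participant_id: str) -> list[str]:
    """Shuffle the word bank separately for each participant.

    Seeding with the participant ID makes each order reproducible for analysis.
    """
    rng = random.Random(participant_id)
    words = WORD_BANK.copy()
    rng.shuffle(words)
    return words


def tally(selections: dict[str, list[str]]) -> Counter:
    """Count how many participants chose each word (repeats by one person count once)."""
    return Counter(word for chosen in selections.values() for word in set(chosen))


selections = {
    "p01": ["engaging", "modern", "busy"],
    "p02": ["boring", "minimal"],
    "p03": ["engaging", "trustworthy"],
}
counts = tally(selections)
for trait in TARGET_TRAITS:
    print(f"{trait}: {counts[trait]} of {len(selections)} participants")
```

Reporting each trait as “chosen by N of M participants” keeps the comparison fair when you test several design versions or audience groups with different sample sizes.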

Numerical Rating Scale

The most structured approach is to collect numerical ratings of how well each brand trait is expressed by the design. Pick three to five important brand qualities and ask people to rate how well each of them is captured by the design. Short and easy questionnaires are ideal. The longer the questionnaire, the higher the chance of random answers.

This paradigm limits the ability to discover new perspectives and reactions, making numerical ratings appropriate only if you’ve figured out the most common perceptions in previous research and simply want to assess the relative strength of each quality. This technique collects a quantitative metric (ratings) and requires a larger number of participants; the results should be analyzed using quantitative techniques.
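As a minimal example of such a quantitative analysis, the sketch below computes each trait’s mean rating with an approximate 95% confidence interval. The trait names are borrowed from the business-to-business example above; the 1-to-7 scale and all ratings are hypothetical, and the sample is kept small only for readability. The interval uses a normal approximation, which is reasonable for larger samples; with small samples, a t-based interval (for example, using `scipy.stats.t.ppf` for the critical value) would be somewhat wider.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def summarize(ratings, confidence=0.95):
    """Return (mean, lower, upper) using a normal-approximation confidence interval."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    m = mean(ratings)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)  # about 1.96 for 95%
    half_width = z * stdev(ratings) / sqrt(len(ratings))
    return m, m - half_width, m + half_width


# Hypothetical 1-to-7 ratings for "How well does this design convey <trait>?"
ratings = {
    "trustworthy": [5, 6, 4, 5, 6, 5, 7, 4, 5, 6],
    "contemporary": [3, 4, 2, 5, 3, 4, 3, 2, 4, 3],
    "helpful": [6, 5, 6, 7, 5, 6, 6, 5, 7, 6],
}

for trait, scores in ratings.items():
    m, lo, hi = summarize(scores)
    print(f"{trait:<13} mean {m:.2f}  95% CI [{lo:.2f}, {hi:.2f}]")
```

Overlapping intervals suggest the data cannot yet distinguish the strength of two traits; that is one reason this method needs more participants than the qualitative approaches.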

Comparison of Methods for Assessing User Feedback

| | Open-Ended Preference Explanation | Open Word Choice | Closed Word Choice | Numerical Rating Scale |
|---|---|---|---|---|
| Description | Participants explain why they like or dislike the design in their own words. | Participants come up with words that describe the design. | Participants choose words from a predefined list to describe the design. | Participants rate the design’s characteristics on a numerical scale. |
| Pros | Detailed feedback; good for discovering what matters to users | Specific, but not overly constrained, feedback | Easier to compare across participants or designs | Easy to collect and analyze data |
| Cons | Irrelevant or vague responses, especially in unmoderated settings | Requires careful analysis to identify themes | Fewer opportunities to discover new points of view | Limited ability to discover new perspectives; requires more participants |
| Environment (all are suitable for in-person or remote settings) | Moderated sessions where followup questions can be asked | Moderated sessions where followup questions can be asked | Moderated sessions where followup questions can be asked | Ideal for unmoderated studies or when quantitative data is prioritized |

Behavioral Testing Methods

Visual design is part of a holistic experience that also includes content and interaction. Therefore, the impact of visual design may become evident only after people start using a system.

To understand the design’s long-term impact, use behavioral testing methods to determine how a visual design affects users’ behavior and task success. For example, the effect of increased header-font size on a webpage might not stand out at first glance, but upon testing, you may find that it helps users scan long-form content more easily. When users can quickly find relevant information, they are likely to have a positive experience with the site.

Eyetracking

By tracking where users look and for how long, you can gather data on which elements of your design draw attention. This method provides insights into the effectiveness of your layout, the visibility of key features, and how well your design guides users through the intended path. For example, if an important call-to-action button isn’t attracting attention, you may need to adjust its size, color, or placement. Eyetracking visualizations, like gaze plots and heat maps, can help you refine your visual design to ensure important elements are noticed.
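To illustrate the idea behind a heat map, the sketch below sums fixation durations into a coarse grid of screen regions and computes the share of total viewing time spent on one area of interest, such as a call-to-action button. The fixation data, page size, grid size, and button bounds are all hypothetical; eyetracking software typically produces fixations for you and usually also smooths the result (for example, with a Gaussian kernel) before rendering a heat map.

```python
def fixation_grid(fixations, width, height, cell=100):
    """Sum fixation durations (ms) into cell-by-cell pixel bins, a simple basis for a heat map."""
    cols = -(-width // cell)  # ceiling division so edge pixels still get a bin
    rows = -(-height // cell)
    grid = [[0] * cols for _ in range(rows)]
    for x, y, duration_ms in fixations:
        if 0 <= x < width and 0 <= y < height:  # ignore off-screen samples
            grid[int(y) // cell][int(x) // cell] += duration_ms
    return grid


def aoi_share(fixations, left, top, right, bottom):
    """Fraction of total fixation time that falls inside a rectangular area of interest."""
    total = sum(d for _, _, d in fixations)
    inside = sum(d for x, y, d in fixations if left <= x <= right and top <= y <= bottom)
    return inside / total if total else 0.0


# Hypothetical fixations: (x, y, duration in ms) on a 1000 x 600 page.
fixations = [(150, 80, 300), (160, 90, 250), (820, 500, 120), (450, 300, 400)]
grid = fixation_grid(fixations, 1000, 600, cell=200)
cta_share = aoi_share(fixations, 800, 450, 950, 550)  # hypothetical call-to-action bounds
print(f"Share of fixation time on the call to action: {cta_share:.1%}")  # 11.2%
```

A low share on a key element, compared across design variations, is the kind of signal that might prompt you to change its size, color, or placement.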

A/B Testing

A/B testing allows you to compare two versions of a design to determine which one performs better in terms of engagement, conversion rates, or other metrics you may be interested in. When it comes to visual design, A/B testing can be used to evaluate how different design elements, like color scheme, typography, or layout, influence behavior. For example, you might test two different button styles to see which one gets more clicks.
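For the button example, one common way to check whether a difference in click-through rate is likely to be real rather than noise is a two-proportion z-test; this is one standard choice, not the only valid analysis. The sketch below uses only the Python standard library, and the click and visitor counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(clicks_a, n_a, clicks_b, n_b):
    """Two-sided z-test comparing click-through rates of versions A and B."""
    p_a = clicks_a / n_a
    p_b = clicks_b / n_b
    pooled = (clicks_a + clicks_b) / (n_a + n_b)  # rate assuming no real difference
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("standard error is zero; need some clicks and some non-clicks")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_a, p_b, z, p_value


# Hypothetical results: each button style shown to 2,400 visitors.
p_a, p_b, z, p = two_proportion_z_test(clicks_a=120, n_a=2400, clicks_b=156, n_b=2400)
print(f"A: {p_a:.1%}  B: {p_b:.1%}  z = {z:.2f}  p = {p:.3f}")
```

Decide on the sample size and significance threshold before launching the test; repeatedly checking results and stopping as soon as p dips below 0.05 inflates the false-positive rate.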

Conclusion

Since you are not the user, what looks good to you and your team might not resonate with your audience. Visual-design testing guides you in gathering feedback to ensure your design aligns with users’ preferences, your brand identity, and your business goals.